(D,E) Convergence of the observed-dependence (D) and spiking discontinuity (E) learning rules in a confounded network (c = 0.5). Variance: how much would your model \(\hat{f}\) vary from sample to sample? The error on a single point is the squared error \((y-\hat{f}(x;D))^{2}\).

Similarly, a larger training set tends to decrease variance. The reward signal may be communicated by neuromodulation.

The spiking discontinuity approach requires that H is an indicator functional, simply indicating the occurrence of a spike or not within window T; it could instead be defined directly in terms of Z.

Bias refers to the error that is introduced by approximating a real-life problem with a simplified model; it is the error that arises from assumptions made in the learning algorithm. Algorithms with high bias tend to be rigid. For instance, a high-bias model that does not match a data set closely will be inflexible and have low variance, resulting in a suboptimal machine learning model. As we can see, the model has found no patterns in our data, and the line of best fit is a straight line that does not pass through any of the data points. Conversely, when test data do not agree as closely with training data, this indicates imprecision and therefore inflated variance. Selecting the correct (optimal) value of the regularization parameter λ will give a balanced result.

This is a compelling account of learning in birdsong; however, it relies on the specific structural form of the learning circuit. How else could neurons estimate their causal effect? Artificial neural networks solve this problem with the backpropagation algorithm.

The causal effect of neuron i on reward R is defined as:

\[ \beta_i := \mathbb{E}\big(R \mid do(H_i = 1)\big) - \mathbb{E}\big(R \mid do(H_i = 0)\big). \]

(D) Δs as a function of window size T and synaptic time constant τs.

This is because, with such a setup, the neuron does not need to distinguish between barely-above-threshold inputs and well-above-threshold inputs, which may be challenging. In fact, in past models and experiments testing voltage-dependent plasticity, changes do not occur when postsynaptic voltages are too low [57, 58]. For low correlation coefficients, representing low confounding, the observed dependence estimator has a lower error.

Here we presented the first exploration of the idea that neurons can perform causal inference using their spiking mechanism. The authors would like to thank Roozbeh Farhoodi, Ari Benjamin and David Rolnick for valuable discussion and feedback.
The option to select many data points over a broad sample space is the ideal condition for any analysis. To identify the time periods in which the neuron is close to threshold, the method uses the maximum input drive to the neuron (Eq 10).

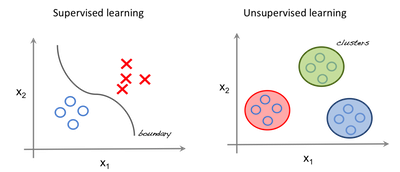

In artificial neural networks, the credit assignment problem is solved using the backpropagation algorithm, which calculates gradients efficiently. A learning algorithm with low bias must be "flexible" so that it can fit the data well. Bias and variance are used in supervised machine learning, in which an algorithm learns from training data or a sample data set of known quantities. In general, it is not possible to build an ML model whose bias and variance are both arbitrarily low; reducing one typically increases the other. Statistically, the symmetric window is the most sensible default. These assumptions are supported numerically (Fig 6). A model with a higher bias would not match the data set closely. Varying N allows us to study how the method scales with network size. We want to find a function \(\hat{f}(x;D)\) that approximates the true function as well as possible, by means of some learning algorithm based on a training dataset (sample) D. If neurons perform something like spiking discontinuity learning, we should expect them to exhibit certain physiological properties.


https://doi.org/10.1371/journal.pcbi.1011005.g005. Let vi(t) denote the membrane potential of neuron i at time t, having leaky integrate-and-fire dynamics. You can measure the resampling variance and bias using the average model metric that is calculated from the different versions of your data set. In this way, the spiking discontinuity may allow neurons to estimate their causal effect.
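Measuring bias and variance from an "average model" across resampled versions of a data set can be sketched with the bootstrap. This is a minimal sketch, assuming a synthetic problem where the true function is known (the hypothetical `true_f(x) = x**2`) and a deliberately simple straight-line model: each resample gives a refitted model, the mean prediction at a point plays the role of the average model, its distance from the truth estimates bias, and the spread of predictions estimates variance. All function names and parameter values are illustrative.

```python
import random
import statistics


def true_f(x):
    # Hypothetical ground truth; known here only because the data are synthetic.
    return x * x


def make_data(n, noise_sd, rng):
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [true_f(x) + rng.gauss(0.0, noise_sd) for x in xs]
    return xs, ys


def fit_line(xs, ys):
    # Ordinary least squares for y = a + b*x (a high-bias model for x**2).
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b


def bootstrap_bias_variance(xs, ys, x0, n_boot, rng):
    """Refit on resampled data sets and summarise the predictions at x0."""
    n = len(xs)
    preds = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        a, b = fit_line([xs[i] for i in idx], [ys[i] for i in idx])
        preds.append(a + b * x0)
    average_model = statistics.fmean(preds)   # prediction of the "average model"
    bias = average_model - true_f(x0)
    variance = statistics.pvariance(preds)    # spread across resampled fits
    return bias, variance


rng = random.Random(0)
xs, ys = make_data(200, 0.1, rng)
bias, variance = bootstrap_bias_variance(xs, ys, x0=0.0, n_boot=200, rng=rng)
print(f"bias={bias:.3f}  variance={variance:.5f}")
```

At x0 = 0 the straight line cannot bend toward 0, so the bias comes out large (near 1/3) while the variance stays small. With real data the true function is unknown, so in practice the average model is usually compared against held-out targets instead.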

When R is a deterministic, differentiable function of S and Δs → 0, this recovers the reward gradient and we recover gradient-descent-based learning. Indeed, this exact approach is taken by [43]. Having validated spiking discontinuity-based causal inference in a small network, we investigate how well we can estimate causal effects in wider and deeper networks. Let's convert categorical columns to numerical ones. A common strategy is to replace the true derivative of the spiking response function (either zero or undefined) with a pseudo-derivative.
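Why the causal effect recovers the reward gradient as Δs → 0 can be seen from a first-order expansion. This is a sketch under simplifying assumptions: a spike only shifts the filtered output from \(S_i\) to \(S_i + \Delta s\), R is a deterministic, differentiable function of S, and the expectation is over the state of the rest of the network.

\[
\beta_i = \mathbb{E}\big[R(S_i + \Delta s) - R(S_i)\big]
        = \Delta s \, \mathbb{E}\!\left[\frac{\partial R}{\partial S_i}\right] + O(\Delta s^2),
\qquad
\lim_{\Delta s \to 0} \frac{\beta_i}{\Delta s} = \mathbb{E}\!\left[\frac{\partial R}{\partial S_i}\right].
\]

Up to the scale factor Δs, the causal effect can therefore stand in for the reward gradient in a gradient-descent-style weight update.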

Plots show the causal effect of each of the first hidden layer neurons on the reward signal. Thus spiking discontinuity is most applicable in irregular but synchronous activity regimes [26]. This is called overfitting (Figure 5: over-fitted model, showing model performance on (a) training data and (b) new data). For any model, we have to find the right balance between bias and variance. On the other hand, because a simple model is simple, its estimates are not much influenced by the training data, so they do not vary much from sample to sample; that is, the estimates of a simple model have low variance. A number of replacements have been explored: [44] uses a boxcar function, [47] uses the negative slope of the sigmoid function, and [45] explores the so-called straight-through estimator, pretending the spike response function is the identity function, with a gradient of 1. We consider the activity of a simulated network of n neurons whose activity is described by their spike times. The neurons obey leaky integrate-and-fire (LIF) dynamics. The causal effect in the correlated inputs case is indeed close to this unbiased value.
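The pseudo-derivatives listed above can be compared side by side. This is a minimal sketch with hypothetical parameters (threshold, window half-width, sigmoid steepness); the exact functional forms used in [44], [45] and [47] may differ. Each function returns the value used in place of the derivative of the spike nonlinearity during a backward pass.

```python
import math


def spike(v, theta=1.0):
    # Heaviside spike nonlinearity: its true derivative is 0 almost everywhere
    # and undefined at v == theta, so it provides no useful gradient.
    return 1.0 if v >= theta else 0.0


def boxcar_pseudo_derivative(v, theta=1.0, half_width=0.5):
    # Constant surrogate inside a window around threshold, zero outside it.
    return 1.0 if abs(v - theta) < half_width else 0.0


def sigmoid_pseudo_derivative(v, theta=1.0, steepness=5.0):
    # Slope of a sigmoid centred on threshold: k * sig * (1 - sig).
    x = steepness * (v - theta)
    if x >= 0:
        sig = 1.0 / (1.0 + math.exp(-x))
    else:                      # numerically stable branch for large negative x
        e = math.exp(x)
        sig = e / (1.0 + e)
    return steepness * sig * (1.0 - sig)


def straight_through_pseudo_derivative(v, theta=1.0):
    # Straight-through estimator: treat the spike function as the identity.
    return 1.0


for v in (0.2, 0.9, 1.0, 1.4, 2.5):
    print(v, spike(v), boxcar_pseudo_derivative(v),
          round(sigmoid_pseudo_derivative(v), 3),
          straight_through_pseudo_derivative(v))
```

The boxcar and sigmoid surrogates concentrate credit on inputs near threshold, whereas the straight-through estimator passes the gradient unchanged regardless of how far the input is from threshold.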


We can see that there is a region in the middle where the error on both the training and testing sets is low and bias and variance are in balance (Figure 7: Bull's-Eye Graph for Bias and Variance). When the errors associated with testing data increase, this is referred to as high variance, and vice versa for low variance. The result follows after making the substitution si → si + Δs in the first term.

Informally, variance shows up in the error on testing data, while bias shows up in the error on training data. [13][14] For example, boosting combines many "weak" (high-bias) models in an ensemble that has lower bias than the individual models, while bagging combines "strong" learners in a way that reduces their variance. Note that bias and variance typically move in opposite directions; increasing bias will usually lead to lower variance, and vice versa. It is well known that there are many neuromodulators which may represent reward or expected reward, including dopaminergic neurons from the substantia nigra to the ventral striatum representing a reward prediction error [25, 38]. https://doi.org/10.1371/journal.pcbi.1011005, Editor: Ulrik R. Beierholm. Supervised learning involves training a model on labeled data, where the target outputs are known. The causal effect βi is an important quantity for learning: if we know how a neuron contributes to the reward, the neuron can change its behavior to increase it. The neurons receive a shared scalar input signal x(t), with added separate noise inputs ηi(t) that are correlated with coefficient c. Each neuron weighs the noisy input by wi.
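The confounding in this two-neuron setup can be illustrated with a static simplification. This is a minimal sketch, not the paper's LIF simulation: each trial draws a shared input x and two private noise terms correlated with coefficient c, the drive is Z = x + η with input weights fixed at 1 (an assumption), a neuron "spikes" when Z ≥ θ, and the hypothetical reward is R = β1·H1 + β2·H2 + noise. It compares the naive observed dependence with a piecewise-constant spiking discontinuity estimate that only uses trials within p of threshold.

```python
import math
import random
import statistics


def simulate_trials(n_trials, c, theta=0.5, beta=(1.0, 1.0), reward_sd=0.5, seed=0):
    """Hypothetical static stand-in for the two-neuron network (no LIF dynamics)."""
    rng = random.Random(seed)
    zs, hs, rs = [], [], []
    for _ in range(n_trials):
        x = rng.gauss(0.0, 1.0)                          # shared input
        g1, g2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        eta1 = g1
        eta2 = c * g1 + math.sqrt(1.0 - c * c) * g2      # corr(eta1, eta2) = c
        z1, z2 = x + eta1, x + eta2                      # drives
        h1, h2 = int(z1 >= theta), int(z2 >= theta)      # spike indicators
        r = beta[0] * h1 + beta[1] * h2 + rng.gauss(0.0, reward_sd)
        zs.append(z1)
        hs.append(h1)
        rs.append(r)
    return zs, hs, rs


def observed_dependence(hs, rs):
    # Naive estimator: E[R | H1 = 1] - E[R | H1 = 0], confounded by neuron 2.
    spk = [r for h, r in zip(hs, rs) if h == 1]
    no_spk = [r for h, r in zip(hs, rs) if h == 0]
    return statistics.fmean(spk) - statistics.fmean(no_spk)


def spiking_discontinuity(zs, rs, theta, p):
    # Piecewise-constant estimator: trials just above vs just below threshold.
    above = [r for z, r in zip(zs, rs) if theta <= z < theta + p]
    below = [r for z, r in zip(zs, rs) if theta - p < z < theta]
    return statistics.fmean(above) - statistics.fmean(below)


zs, hs, rs = simulate_trials(100_000, c=0.5)
obs = observed_dependence(hs, rs)
sde = spiking_discontinuity(zs, rs, theta=0.5, p=0.1)
print("true beta1:            1.0")
print("observed dependence:  ", round(obs, 3))
print("spiking discontinuity:", round(sde, 3))
```

Because neuron 2's drive is correlated with neuron 1's, H2 is much more likely when H1 = 1, so the observed dependence absorbs part of β2. Within a narrow window around threshold, H2 is almost equally likely on either side, so the discontinuity estimate stays close to β1; widening p reintroduces some of that bias.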

In the above equation, Y represents the value to be predicted. Regression discontinuity design, the related method in econometrics, has studied optimizing the underlying kernel, which need not be symmetric depending on the relevant distributions.

Many of these methods use something like the REINFORCE algorithm [39], a policy gradient method in which locally added noise is correlated with reward and this correlation is used to update weights. Any difference in observed reward can therefore only be attributed to the neuron's activity. There are two main types of errors present in any machine learning model: bias and variance. It also requires full knowledge of the system, which is often not the case if parts of the system relate to the outside world. In particular, we primarily presented empirical results demonstrating the idea in numerous settings. This is further skewed by false assumptions, noise, and outliers. Yet, despite these ideas, we may still wonder if there are computational benefits of spikes that balance the apparent disparity in the learning abilities of spiking and artificial networks. It effectively estimates the causal effect in a spiking neural network with two hidden layers (Fig 5B). This will cause our model to consider trivial features as important (Figure 4: Example of Variance). In the above figure, we can see that our model has learned extremely well from our training data, which has taught it to identify cats. The estimated β is then used to update weights to maximize expected reward in an unconfounded network (uncorrelated noise, c = 0.01). Models with high bias and low variance are consistent but wrong on average. Decreasing the value of λ will solve the underfitting (high bias) problem.
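The effect of the regularization strength λ can be seen in closed form for a single feature. This sketch assumes an L2 (ridge) penalty on the slope with an unpenalized intercept; the regularized linear regression referred to in the text may use a different penalty, and `ridge_fit_1d` is a hypothetical name. Minimizing Σ(y − a − bx)² + λb² gives b = Sxy / (Sxx + λ), so a larger λ pulls the fit toward a flat line (more bias, less variance) and a smaller λ lets it follow the data (less bias, more variance).

```python
def ridge_fit_1d(xs, ys, lam):
    """Minimise sum((y - a - b*x)**2) + lam * b**2; the intercept a is not penalised."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / (sxx + lam)      # larger lam shrinks the slope toward 0
    a = my - b * mx            # line passes through the data mean
    return a, b


xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]      # exactly y = 1 + 2x
for lam in (0.0, 5.0, 50.0):
    print(lam, ridge_fit_1d(xs, ys, lam))
```

With λ = 0 the fit recovers y = 1 + 2x exactly; with λ = 5 the slope is halved to 1.0 and the intercept rises to 2.5 to keep the line through the mean of the data.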

When a confounded network (correlated noise, c = 0.5) is used, spiking discontinuity learning exhibits similar performance, while learning based on the observed dependence sometimes fails to converge due to the bias in its gradient estimate. It will capture most patterns in the data, but it will also learn from the unnecessary data present, or from the noise. Larger time windows and longer time constants lower the change in Si due to a single spike. Softmax output above 0.5 indicates a network output of 1, and below 0.5 indicates 0. A one-order-higher model of the reward adds a linear correction, resulting in a piecewise linear model of the reward function. An overfitted model tries to fit all data points as closely as possible.
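A piecewise linear model of the reward can be fitted as two local linear regressions, one on each side of threshold, with the causal effect read off as the jump between their intercepts at θ. This is a minimal sketch, assuming a hypothetical reward that depends linearly on the drive Z (slope α = 2) in addition to the spike, and a symmetric window of half-width p; the piecewise constant estimator is included for comparison.

```python
import random
import statistics


def _ols(xs, ys):
    # Least-squares line y = a + b*x; returns (a, b).
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b


def piecewise_constant_estimate(zs, rs, theta, p):
    above = [r for z, r in zip(zs, rs) if theta <= z < theta + p]
    below = [r for z, r in zip(zs, rs) if theta - p < z < theta]
    return statistics.fmean(above) - statistics.fmean(below)


def piecewise_linear_estimate(zs, rs, theta, p):
    """Fit a line on each side of threshold in (z - theta); return the jump at theta."""
    right = [(z - theta, r) for z, r in zip(zs, rs) if theta <= z < theta + p]
    left = [(z - theta, r) for z, r in zip(zs, rs) if theta - p < z < theta]
    a_right, _ = _ols([d for d, _ in right], [r for _, r in right])
    a_left, _ = _ols([d for d, _ in left], [r for _, r in left])
    return a_right - a_left    # difference of intercepts at z = theta


rng = random.Random(1)
theta, beta, alpha = 0.0, 1.0, 2.0
zs = [rng.uniform(theta - 1.0, theta + 1.0) for _ in range(20_000)]
rs = [beta * (z >= theta) + alpha * (z - theta) + rng.gauss(0.0, 0.1) for z in zs]
print("piecewise constant:", round(piecewise_constant_estimate(zs, rs, theta, 0.5), 3))
print("piecewise linear:  ", round(piecewise_linear_estimate(zs, rs, theta, 0.5), 3))
```

With a uniformly distributed drive, the piecewise constant estimate absorbs the slope (roughly β + αp, here about 2), while the piecewise linear estimate stays close to β = 1; the linear correction matters most when the window p is wide.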

If there is a statistical dependence between the underlying dynamic variables (h and x), then there is also some statistical dependence between these variables in the aggregated variables. This feature of simple models results in high bias.

Estimating the causal effect allows us to update the weights according to a stochastic gradient-like update rule. (A) Graphical model describing the neural network. The more complex the model, the more data points it will capture and the lower its bias will be.

In contrast, the spiking discontinuity error is more or less constant as a function of correlation coefficient, except for the most extreme case of c = 0.99.

Suppose that we have a training set consisting of a set of points x1, …, xn and real values yi associated with each point xi. (E,F) Approximation to the reward gradient overlaid on the expected reward landscape. The discontinuity-based method provides a novel and plausible account of how neurons learn their causal effect. The true causal effect was estimated from simulations with zero noise correlation and with a large window size, in order to produce the most accurate estimate possible. LIF neurons have a refractory period of 1, 3 or 5 ms. Error is comparable for different refractory periods. And it is this difference in network state that may account for an observed difference in reward, not specifically the neuron's activity. The input drive is used here instead of the membrane potential directly because it can distinguish between marginally super-threshold inputs and easily super-threshold inputs, whereas this information is lost in the voltage dynamics once a reset occurs. Finding a function \(\hat{f}\) that generalizes to points outside of the training set can be done with any of the countless algorithms used for supervised learning. https://doi.org/10.1371/journal.pcbi.1011005.g002. Instead, we can estimate βi only for inputs that placed the neuron close to its threshold. In unsupervised learning, the machine uses unlabeled data and learns by itself without any supervision. That is, there is a sense in which:

When performance is sub-optimal, the brain needs to decide which activities or weights should be different. This internal variable is combined with a term to update synaptic weights. It's actually a mathematical property of the algorithm that is acting on the data. Increasing the value of λ will solve the overfitting (high variance) problem. Causal effects are formally defined in the context of a certain type of probabilistic graphical model, the causal Bayesian network, while a spiking neural network is a dynamical, stochastic process. This is called the bias-variance tradeoff. In this work we show that the discontinuous, all-or-none spiking response of a neuron can in fact be used to estimate a neuron's causal effect on downstream processes. In contrast, algorithms with high bias typically produce simpler models that may fail to capture important regularities (i.e., underfit) in the data. To test the ability of spiking discontinuity learning to estimate causal effects in deep networks, the learning rule is used to estimate causal effects of neurons in the first layer on the reward function R. https://doi.org/10.1371/journal.pcbi.1011005.s001. This can be done either by increasing the complexity or by increasing the size of the training data set. However, being adaptable, a complex model \(\hat{f}\) tends to vary a lot from sample to sample, which means high variance. More specifically, in a spiking neural network, the causal effect can be seen as a type of finite difference approximation of the partial derivative (reward with a spike vs reward without a spike). Variance refers to how much the target function's estimate will fluctuate as a result of varied training data. That is, over each simulated window of length T: Being fully connected in this way guarantees that we can factor the distribution with the graph and it will obey the conditional independence criterion described above.


At the end of a trial period T, the neural output determines a reward signal R. Most aspects of causal inference can be investigated in a simple, few-variable model such as this [32]; thus, demonstrating that a neuron can estimate a causal effect in this simple case is an important first step to understanding how it can do so in a larger network.

Convolutional Neural Networks (CNNs) are a type of deep learning architecture specifically designed for processing grid-like data, such as images or time-series data. We are still seeking to understand how biological neural networks effectively solve this problem. That is, let Zi be the maximum integrated neural drive to the neuron over the trial period. In machine learning, error is used to see how accurately our model can predict on the data it uses to learn, as well as on new, unseen data. The bias-variance dilemma or bias-variance problem is the conflict in trying to simultaneously minimize these two sources of error that prevent supervised learning algorithms from generalizing beyond their training set. In this way the causal effect is a relevant quantity for learning.

Data Availability: All Python code used to run simulations and generate figures is available at https://github.com/benlansdell/rdd. Noise-correlation methods such as REINFORCE are significantly slower than backpropagation.

(A) Parameters for the causal effect model, u, are updated based on whether the neuron is driven marginally below or above threshold. https://doi.org/10.1371/journal.pcbi.1011005.g001. For clarity, other neurons' Z variables have been omitted from this graph. Spiking neural networks generally operate dynamically, with activities unfolding over time, yet supervised learning in an artificial neural network typically has no explicit dynamics: the state of a neuron is only a function of its current inputs, not its previous inputs. If considered as a gradient, then any angle well below 90° represents a descent direction in the reward landscape, and thus shifting parameters in this direction will lead to improvements. Furthermore, a large data set allows users to increase model complexity without the variance errors that would otherwise pollute the model. We assume that there is a function f(x) such that y = f(x) + ε. (B) This offset, Δs, is independent of firing rate and is unaffected by correlated spike trains. Given this causal network, we can then define a neuron's causal effect.
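For reference, with \(y = f(x) + \varepsilon\), where the noise ε has zero mean and variance σ² and is independent of the training set D, the expected squared error of \(\hat{f}(x;D)\) at a point x splits into three terms (the standard bias-variance decomposition):

\[
\mathbb{E}_{D,\varepsilon}\!\left[\big(y - \hat{f}(x;D)\big)^2\right]
= \Big(\mathbb{E}_D\big[\hat{f}(x;D)\big] - f(x)\Big)^2
+ \mathbb{E}_D\!\left[\Big(\hat{f}(x;D) - \mathbb{E}_D\big[\hat{f}(x;D)\big]\Big)^2\right]
+ \sigma^2 .
\]

The first term is the squared bias, the second is the variance, and σ² is irreducible error that no choice of \(\hat{f}\) can remove.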


(A) Simulated spike trains are used to generate Si|Hi = 0 and Si|Hi = 1. If θ is the neuron's spiking threshold, then a maximum drive above θ results in a spike, and below θ results in no spike. Bias and variance are just descriptions for the two ways that a model can give subpar results, for example when fitting a model such as \(\text{nox} = f(\text{dis}, \text{zn})\). In this paper, SDE-based learning was explored in the context of maximizing a reward function or minimizing a loss function.
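The relationship between the threshold θ, the maximum drive Zi, the spike indicator Hi and the filtered output Si can be illustrated with a generic leaky integrate-and-fire neuron. This is a minimal Euler-integration sketch, not the paper's exact equations: the time constants, threshold, reset value and the choice to define the maximum drive as the largest value of an un-reset copy of the membrane integration are all assumptions. Because the un-reset copy follows the membrane potential exactly until the first spike, H = 1 exactly when Z ≥ θ.

```python
import math


def simulate_lif_window(drive, dt=1e-3, tau_m=0.01, theta=1.0, v_reset=0.0, tau_s=0.02):
    """Euler-integrate one trial window of a leaky integrate-and-fire neuron.

    Returns (Z, H, S): the maximum of an un-reset input drive, a spike indicator,
    and an exponentially filtered spike train at the end of the window.
    """
    v = 0.0          # membrane potential, reset after each spike
    u = 0.0          # same leaky integration of the input, but never reset
    s = 0.0          # synaptically filtered output
    z = -math.inf    # running maximum of u over the window
    n_spikes = 0
    for i_t in drive:
        v += dt / tau_m * (-v + i_t)
        u += dt / tau_m * (-u + i_t)
        z = max(z, u)
        s -= dt / tau_s * s          # exponential decay of the filtered output
        if v >= theta:
            n_spikes += 1
            v = v_reset
            s += 1.0                 # each spike adds a fixed jump to S
    h = 1 if n_spikes > 0 else 0
    return z, h, s


for level in (0.8, 1.05, 2.0):
    z, h, s = simulate_lif_window([level] * 100)
    print(f"drive={level}: Z={z:.3f}  H={h}  S={s:.3f}")
```

A constant drive of 1.05 only just crosses threshold while 2.0 crosses it easily; both give H = 1, but Z keeps the difference between them, which is the information the text notes is lost in the voltage once a reset occurs.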

Reward-modulated STDP (R-STDP) can be shown to approximate the reinforcement learning policy gradient type algorithms described above [50, 51]. The activity contributes to reward R. Though not shown, this relationship may be mediated through downstream layers of a neural network, and complicated interactions with the environment. Call this naive estimator the observed dependence. First, assume the conditional independence of R from Hi given Si and Qji. SDE-based learning, on its own, is not a learning rule that is significantly more efficient than REINFORCE; instead, it is a rule that is more robust to the structure of noise that REINFORCE-based methods utilize. Comparing the average reward when the neuron spikes versus does not spike gives a confounded estimate of the neuron's effect. Bias is the difference between the model's average prediction and the correct value. (C) If H1 and H2 are independent, the observed dependence matches the causal effect.
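The REINFORCE-style idea of correlating locally added noise with reward can be sketched for a single linear unit. This is a minimal node-perturbation sketch in that spirit, not the exact rule of [39] or of R-STDP: the target gain, learning rate, noise level and the use of the noise-free reward as a baseline are all assumptions. In expectation, the update (R − baseline)·ξ·x is proportional to the negative gradient of the squared error, so w drifts toward the target even though the unit only ever sees a scalar reward.

```python
import random


def node_perturbation_train(n_steps=3000, lr=0.5, sigma=0.1, target_gain=2.0, seed=0):
    """Learn w so that w*x matches target_gain*x, using only a scalar reward."""
    rng = random.Random(seed)
    w = 0.0
    for _ in range(n_steps):
        x = rng.uniform(0.5, 1.5)
        xi = rng.gauss(0.0, sigma)                        # locally added noise
        reward = -((w * x + xi) - target_gain * x) ** 2   # reward with noise
        baseline = -((w * x) - target_gain * x) ** 2      # reward without noise
        w += lr * (reward - baseline) * xi * x            # noise-reward correlation
    return w


print(round(node_perturbation_train(), 3))
```

Using the noise-free reward as a baseline assumes the circuit can evaluate the reward twice; a running average of past rewards is a common alternative. Because the noise term must average out over many trials, rules of this kind typically learn more slowly than backpropagation.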

Bias is a phenomenon that occurs when the algorithm used in a machine learning model does not fit the data properly. A flexible model, by contrast, fits the training data closely, which results in small bias. When an agent has limited information on its environment, the suboptimality of an RL algorithm can be decomposed into the sum of two terms: a term related to an asymptotic bias and a term due to overfitting. We need to give more details for the wide network (Fig 5A) simulations. The simulations for Figs 3 and 4 are about standard supervised learning, and there an instantaneous reward is given. Importantly, neither the activity of upstream neurons, which act as confounders, nor downstream non-linearities bias the results.

To consider the effect of a single spike, note that unit i spiking will cause a jump in Si compared to not spiking (according to synaptic dynamics).

We simulate a single hidden layer neural network of varying width (Fig 5A; refer to the Methods for implementation details).

[18] Even though the bias-variance decomposition does not directly apply in reinforcement learning, a similar tradeoff can also characterize generalization. In supervised (directed) learning, we have an input x, based on which we try to predict the output y. Again, this is done for all time periods at which zi,n is within p of the threshold θ. High-variance learning methods may be able to represent their training set well but are at risk of overfitting to noisy or unrepresentative training data. Generally, your goal is to keep bias as low as possible while introducing acceptable levels of variance. This holds for maximum input drive obtained over a short time window, Zi, and spiking threshold, θ; thus, Zi < θ means neuron i does not spike and Zi ≥ θ means it does. To create an accurate model, a data scientist must strike a balance between bias and variance, ensuring that the model's overall error is kept to a minimum. The neurons receive inputs from an input layer x(t), along with a noise process ηj(t), weighted by synaptic weights wij. The results in this model exhibit the same behavior as that observed in previous sections: for sufficiently highly correlated activity, performance is better for a narrow spiking discontinuity parameter p. In addition, one has to be careful how to define complexity: in particular, the number of parameters used to describe the model is a poor measure of complexity.

For brevity, we drop the D subscript on our expectation operators. Since they are all linear regression algorithms, their main difference would be the coefficient values. All these contribute to the flexibility of the model. The term variance relates to how the model varies as different parts of the training data set are used. Thus R-STDP can be cast as performing a type of causal inference on a reward signal, and shares the same features and caveats as outlined above. In the data, we can see that the date and month are in military time and are in one column. Because the aggregated variables maintain the same ordering as the underlying dynamic variables, there is a well-defined sense in which R is indeed an effect of Hi, not the other way around, and therefore the causal effect βi is a sensible, and not necessarily zero, quantity (Fig 1C and 1D). Copyright: 2023 Lansdell, Kording. Given this, we may wonder: why do neurons spike? Dashed lines show the observed-dependence estimator and solid lines show the spiking discontinuity estimator, for correlated and uncorrelated (unconfounded) inputs, over a range of window sizes p. The observed dependence estimator is significantly biased with confounded inputs (cf. [53]).


The same applies when creating a low variance model with a higher bias. It turns out that, whichever function \(\hat{f}\) we select, its expected error on an unseen sample can be decomposed into bias, variance and irreducible noise. The prediction (estimate) for a new point is \(\hat{y} = \hat{f}(x_{new})\). This means we can estimate the causal effect βi from observations near threshold.

Figure 14: Converting categorical columns to numerical form. Figure 15: New numerical dataset. Eventually, we plug these three formulas into our previous derivation. The observed dependence is biased by correlations between neurons 1 and 2: changes in reward caused by neuron 1 are also attributed to neuron 2. https://doi.org/10.1371/journal.pcbi.1011005.g003. Performance on new, unseen data is known as a model's generalisation performance. Overfitting happens when the variance is high: the model captures all the features of the data given to it, including the noise, tunes itself to that data and predicts it very well, but cannot predict well on new data because it is too specific to the training data. Hence, such a model will perform really well on training data and get high accuracy, but will fail to perform on new, unseen data. The graph is directed, acyclic and fully connected. Equation 1: Linear regression with regularization. That is, since we assume that R is a function of some filtered neural output of the network, it can be shown that the causal effect βi, under certain assumptions, does indeed approximate the reward gradient. The noise ε has zero mean and variance σ². In this section we discuss the concrete demands of such learning and how they relate to past experiments. Here, integrate-and-fire means simply that the neuron integrates its input and emits a spike when its membrane potential crosses threshold, after which the potential is reset. Importantly, this finite-difference approximation is exactly what our estimator gets at. These results show that spiking discontinuity can estimate causal effects in both wide and deep neural networks. Of course, given that our simulations are based on a simplified model, it makes sense to ask what neurophysiological features may allow spiking discontinuity learning in more realistic learning circuits. The resulting heuristics are relatively simple, but produce better inferences in a wider variety of situations.[20]
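Converting a categorical column to numerical form, as in Figure 14, is commonly done with one-hot encoding: one 0/1 indicator column per category. This is a minimal pure-Python sketch; the column names and rows are hypothetical, and the original figure may have used a different encoding or library.

```python
def one_hot_encode(rows, column):
    """Replace a categorical column with 0/1 indicator columns (one per category)."""
    categories = sorted({row[column] for row in rows})
    encoded = []
    for row in rows:
        new_row = {key: value for key, value in row.items() if key != column}
        for cat in categories:
            new_row[f"{column}_{cat}"] = 1 if row[column] == cat else 0
        encoded.append(new_row)
    return encoded, categories


rows = [
    {"month": "Jan", "sales": 10},
    {"month": "Feb", "sales": 12},
    {"month": "Jan", "sales": 9},
]
encoded, cats = one_hot_encode(rows, "month")
print(cats)        # ['Feb', 'Jan']
print(encoded[0])  # {'sales': 10, 'month_Feb': 0, 'month_Jan': 1}
```

One-hot encoding avoids implying an order between categories, which a plain integer code (Jan = 1, Feb = 2, ...) would impose on a linear model.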
A trivial functional fR(r) = 0 would destroy any dependence between X and R. Given these considerations, for the subsequent analysis, the following choices are used: This is because model-free approaches to inference require impractically large training sets if they are to avoid high variance. Such perturbations come at a cost, since the noise can degrade performance. The bias-variance tradeoff is a particular property of all (supervised) machine learning models that enforces a tradeoff between how "flexible" the model is and how well it performs on unseen data. Simulating this simple two-neuron network shows how a neuron can estimate its causal effect using the SDE (Fig 3A and 3B). We found that the asymmetric estimator performs worse when using the piecewise constant estimator of causal effect, but performs comparably to the symmetric version when using the piecewise linear estimator.

That is, how does a neuron know its effect on downstream computation and rewards, and thus how it should change its synaptic weights to improve?

We tested, in particular, the case where p is some small value on the left of the threshold (sub-threshold inputs), and where p is large to the right of the threshold (above-threshold inputs).
